I’ve been trying new tuning algorithms to find the optimum low number ratios for tuning Bach chorales. James Kukula on the Facebook group Microtonal Music and Theory suggested I explore Simulated Annealing to find the optimum tuning. I asked my friendly local LLM running granite-code:20B for some advice, and it helpfully coded up the algorithm for me.

The basic idea is that you start with a cent value for each of the four notes in each chord, then change those values until you reach a chord that scores well on an analysis function. The key trick of Simulated Annealing is that at first you probabilistically accept non-optimum values for an interval instead of always taking the very lowest number ratio. As you repeat the process, you gradually lower the temperature, which controls how likely you are to accept a non-optimum choice. Eventually, after enough repetitions, you are only accepting the optimum choices. This avoids the situation where the first choice you make causes the options for subsequent choices to be bad. You want to avoid what are called local minima: suboptimal arrangements that you arrive at by blindly accepting only the optimum from the start. Sometimes if you start out sub-optimally for the first few intervals, the sum of all the ratios in the chord ends up lower.
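The heart of the method is the acceptance rule. Here is a minimal, generic sketch in Python, not the granite-generated code mentioned above: `accept` implements the standard Metropolis criterion, and `anneal` is a hypothetical driver that minimizes any score given a caller-supplied neighbor function. The default temperature and cooling rate are the values reported later in this post; the step count and the toy problem are assumptions for illustration.

```python
import math
import random


def accept(delta: float, temperature: float, rng: random.Random) -> bool:
    """Metropolis rule: always take an improvement (delta <= 0); take a
    worse move with probability exp(-delta / temperature)."""
    if delta <= 0:
        return True
    if temperature <= 0:
        return False
    return rng.random() < math.exp(-delta / temperature)


def anneal(initial, neighbor, score, t0=64.0, cooling=0.998, steps=5000, seed=0):
    """Minimize score(state) by simulated annealing.

    neighbor(state, rng) must return a randomly perturbed copy of state.
    Returns the best state seen and its score.
    """
    rng = random.Random(seed)
    current, current_score = initial, score(initial)
    best, best_score = current, current_score
    temperature = t0
    for _ in range(steps):
        candidate = neighbor(current, rng)
        candidate_score = score(candidate)
        if accept(candidate_score - current_score, temperature, rng):
            current, current_score = candidate, candidate_score
            if current_score < best_score:
                best, best_score = current, current_score
        temperature *= cooling  # geometric cooling
    return best, best_score


# Toy usage: find the integer closest to 3 by random +/-1 steps.
best, best_score = anneal(0, lambda x, rng: x + rng.choice((-1, 1)), lambda x: (x - 3) ** 2)
print(best, best_score)
```

Tracking the best state separately from the current one means a late uphill move can never lose a good answer found earlier.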

In my case, I start with a tonality diamond to a variable limit. I’ve found through repeated grid searches that either the 31-limit or 47-limit produces the best scores. I split the 4-note chord into 6 separate intervals, then run a function that returns all the valid ratios that could be used for each of the six intervals. Valid means:

It is an interval in the tonality diamond, made up of all the intervals with numerators and denominators from 2 through the specified limit. If the limit is 5, for example, the diamond includes ratios such as 3:2, 4:3, and 5:4. As the limit increases to 31, the diamond offers ratios like 31:30, 25:37, and other decidedly not low number ratios. I tried some low limits, like 15, and the results were poor.

It does not change the underlying MIDI note value, the 12-tone equal temperament value of each note in the chord. If a ratio is so far from the equal-tempered interval that adding its cent value to the lower note would round to a D sharp instead of a D natural, it is excluded from the returned list. I generally find 10-15 valid ratios to choose from.

I return a list of indices into the tonality diamond structure, where each entry holds the ratio value in floating point, the cent value of the interval, and the sum of the numerator and denominator of the ratio.
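A minimal sketch of the diamond and the validity filter, under stated assumptions: ratios are built from every numerator and denominator from 2 through the limit and octave-reduced into [1, 2); a unison is weighted 0, as in the scoring example later in this post; and "valid" is approximated as "stacking the ratio on the lower note's cent value still rounds to the upper note's 12-TET semitone". The names `DiamondEntry`, `tonality_diamond`, and `valid_indices` are hypothetical.

```python
import math
from fractions import Fraction
from typing import NamedTuple


class DiamondEntry(NamedTuple):
    ratio: Fraction  # exact ratio, octave-reduced into [1, 2)
    value: float     # the ratio in floating point
    cents: float     # size of the interval in cents
    weight: int      # numerator + denominator (unison weighted 0)


def octave_reduce(r: Fraction) -> Fraction:
    """Fold a ratio into the octave [1, 2)."""
    while r >= 2:
        r /= 2
    while r < 1:
        r *= 2
    return r


def tonality_diamond(limit: int) -> list[DiamondEntry]:
    """All octave-reduced n/d with 2 <= n, d <= limit, sorted by size."""
    ratios = {octave_reduce(Fraction(n, d))
              for n in range(2, limit + 1) for d in range(2, limit + 1)}
    entries = []
    for r in sorted(ratios):
        weight = 0 if r == 1 else r.numerator + r.denominator
        entries.append(DiamondEntry(r, float(r), 1200 * math.log2(float(r)), weight))
    return entries


def valid_indices(diamond: list[DiamondEntry], low_cents: float, high_semitone: int) -> list[int]:
    """Indices of entries that, stacked on a lower note at low_cents, keep the
    upper note on its 12-TET semitone (no change of MIDI note after rounding)."""
    return [i for i, e in enumerate(diamond)
            if round((low_cents + e.cents) / 100) == high_semitone]
```

For example, with a D at 202 cents below a G (semitone 7), only 4/3 survives from the 5-limit diamond; 5/4 rounds to F♯ and 3/2 to A, so both are rejected.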

In this way the algorithm can tune a chord to cent values that represent low number ratios.

I then have the option of choosing, from the list of valid ratios, the one with the lowest number ratio. Or not. Simulated Annealing starts out with a high temperature, then with each iteration it multiplies the temperature by a cooling rate slightly below 1. In my case, I did many grid searches and determined that starting with a temperature of 64.0, then multiplying it by a cooling rate of 0.998 with each pass, results in the lowest number ratios. I started out with temperatures in the tens of thousands and cooling rates closer to 1.0, but found that they did not improve the results.
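To get a feel for what a start of 64.0 and a cooling rate of 0.998 mean in practice, here is a small sketch of the geometric schedule. The stopping threshold of 0.01 and the score penalty of 10 are hypothetical values chosen only for illustration; the post does not state how many passes it runs.

```python
import math


def temperature_after(passes: int, t0: float = 64.0, cooling: float = 0.998) -> float:
    """Temperature after a number of geometric cooling passes: t0 * cooling**passes."""
    return t0 * cooling ** passes


def passes_until(threshold: float, t0: float = 64.0, cooling: float = 0.998) -> int:
    """Count passes until the temperature first drops below threshold."""
    temperature, passes = t0, 0
    while temperature >= threshold:
        temperature *= cooling
        passes += 1
    return passes


def acceptance_probability(delta: float, temperature: float) -> float:
    """Chance of accepting a chord whose score is worse by delta."""
    return math.exp(-delta / temperature)


# How willing the search is to accept a score that is 10 points worse.
for n in (0, 500, 1000, 2000):
    t = temperature_after(n)
    print(n, round(t, 3), round(acceptance_probability(10, t), 4))
print(passes_until(0.01))
```

Because the cooling is geometric, the temperature halves roughly every 350 passes (ln 2 / 0.002), so most of the exploratory behavior happens in the first thousand or so passes.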

I achieved better results by systematically rearranging the order of the notes in the chord, then running the simulated annealing multiple times. This lowered the scores significantly. I use a Python library called NumPy, which includes a function called roll that rotates a 4-note chord. If I start with C E G A#, a roll of one returns E G A# C, two returns G A# C E, and so forth. I found that rolls of 0, 1, 2, 3, 4, 5 for every chord produce the lowest scores. Even simulated annealing gets stuck in local minima, and simply running the same chord through the algorithm more than once lets it pick a better arrangement.
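The rotation step can be sketched with `numpy.roll`. One detail worth knowing: with a positive shift, `np.roll` moves elements toward the end of the array, so `np.roll(chord, 1)` gives A# C E G; the rotation described above (C E G A# to E G A# C) corresponds to a shift of -1. The helper names `rotations` and `unrotate` are hypothetical.

```python
import numpy as np


def rotations(chord, shifts=range(6)):
    """Return the chord rotated by each shift.

    np.roll(a, k) moves elements k places toward the END of the array,
    so a shift of -k brings element k to the front:
    C E G A# -> E G A# C for k = 1.
    """
    return [np.roll(chord, -k) for k in shifts]


def unrotate(tuned, k):
    """Undo a rotation of -k so tuned cents line up with the original voices."""
    return np.roll(tuned, k)


chord = np.array(["C", "E", "G", "A#"])
for k, r in enumerate(rotations(chord)):
    print(k, " ".join(r))  # shifts 4 and 5 wrap around to shifts 0 and 1
```

A four-note chord has only four distinct rotations, so shifts 4 and 5 resend the same arrangements as 0 and 1. That fits the observation that simply running the same chord through the annealer again can still find a better result.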

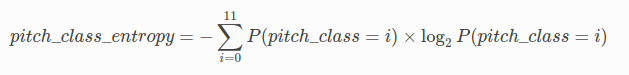

I score each chord by simply adding up the sum of the numerator and denominator for each of the six intervals. This produces the score for the chord. I’ve been using this for several months now, running several different Bach chorales through the algorithm. I start with a set of MIDI values for each note in each chord and convert each one to a pitch class from 0-11, plus a separate value for the octave. Then I run the chord through the annealing process, which produces a 4-note chord in cents. What I noticed recently is that this is precisely what Harry Partch was getting at in his famous (to me) “One-Footed Bride” chart.
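Both steps are small enough to show directly. In this sketch (function names are hypothetical), `divmod` splits a MIDI note into pitch class and octave index, and the score sums numerator plus denominator over the six interval ratios, counting a unison as 0 to match the worked example in this post.

```python
from fractions import Fraction


def split_midi(note: int) -> tuple[int, int]:
    """Split a MIDI note number into (pitch class 0-11, octave index)."""
    octave, pitch_class = divmod(note, 12)
    return pitch_class, octave


def chord_score(interval_ratios) -> int:
    """Sum numerator + denominator over a chord's six interval ratios.
    A unison (1/1) counts as 0."""
    return sum(0 if r == 1 else r.numerator + r.denominator
               for r in map(Fraction, interval_ratios))


print(split_midi(67))  # G above middle C
print(chord_score(["4/3", "5/4", "1", "5/3", "4/3", "5/4"]))
```

Note that the octave index here is simply `note // 12`, so middle C (60) lands in index 5 rather than the scientific-pitch octave number 4.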

Partch used this chart in his great book “Genesis of a Music”, page 135. He identifies ratios by several adjectives: Power, Emotion, Approach, and Suspense. But the important value is how far each ratio sticks out: low number ratios like 3:2 and 4:3 stick way out and indicate strength; higher number ratios like 5:4 and 8:5 stick out less; still higher number ratios like 7:6 and 8:7 stick out even less. In other words, the lower the numbers a ratio reduces to, the greater its strength. That’s just what my scoring algorithm is doing: tuning each chord to the lowest number ratios possible. In honor of Partch’s One-Footed Bride, I tuned Bach’s Wedding Chorales BWV154-164. Audio files are at the bottom of this post.

I started out in my algorithm search achieving average scores of around 60 for the six intervals in a 4-note chord. This implies that, on average, each chord used six intervals where the sum of the numerator and denominator for each interval was around 10. Imagine a chord consisting of ['G♮', 'D♮', 'B♮', 'G♮'], which in MIDI is represented by [7, 2, 11, 7] once you remove the octave. If I choose the cent values [700, 202, 1086, 700] for the four notes, I end up with the six intervals tuned to the following ratios. The first two numbers are the positions of the notes in the chord: 0 is the first, 1 the second, 2 the third, and so forth (Python always starts counting at zero instead of 1). The first interval (0,1) is 4/3, which sums to 7. The second (0,2) is 5/4, which sums to 9. The third (0,3) is the same note in both cases, G♮, so it scores 0. The fourth (1,2) is 5/3, which sums to 8. Do that for all six and you end up with a score of 40 (7+9+0+8+7+9=40). The score for this chord is therefore 40. Pretty good. Tuned to just intonation, with the lowest number ratios:

[(0, 1, ' 498', ' 4/3 '), (0, 2, ' 386', ' 5/4 '), (0, 3, ' 0', ' 1 '), (1, 2, ' 884', ' 5/3 '), (1, 3, ' 498', ' 4/3 '), (2, 3, ' 386', ' 5/4 ')]
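The six pairs in that list come from every combination of two of the four notes, and each interval is the absolute difference of their cent values. Here is a minimal sketch that reproduces the printout, assuming a small hypothetical candidate list (only the ratios that appear in this post) and a 2-cent matching tolerance. The post's algorithm chooses ratios from the diamond directly; this sketch only names the intervals after the fact.

```python
import math
from fractions import Fraction
from itertools import combinations

# Hypothetical candidate list: just the ratios that appear in this post's examples.
CANDIDATES = [Fraction(s) for s in
              ("1", "7/6", "6/5", "25/21", "5/4", "4/3", "7/5", "10/7", "3/2", "8/5", "5/3")]


def cents(r: Fraction) -> float:
    return 1200 * math.log2(r.numerator / r.denominator)


def label_intervals(chord_cents, tolerance=2.0):
    """Pair every two notes (6 pairs for 4 notes), take the absolute difference
    in cents, and name it with the lowest-sum candidate ratio within tolerance."""
    out = []
    for i, j in combinations(range(len(chord_cents)), 2):
        size = abs(chord_cents[i] - chord_cents[j])
        matches = [r for r in CANDIDATES if abs(cents(r) - size) <= tolerance]
        best = min(matches, key=lambda r: r.numerator + r.denominator, default=None)
        out.append((i, j, size, best))
    return out


for row in label_intervals([700, 202, 1086, 700]):
    print(row)
```

Feeding it the diminished chord from measure 7 of BWV263, [200, 782, 466, 1084], yields 7/5, 7/6, 5/3, 6/5, 25/21, and 10/7, the same ratios shown for chord 104.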

Do this for all the chords in a chorale, summing the numerators and denominators of all six intervals in each chord; the goal is the lowest possible score. When I started using simulated annealing with all 24 permutations of each chord, I began getting average scores around 55. Grid searches for the optimum hyperparameters of the algorithm reduced the averages to below 50 for most chorales. But the problem then was that it took an hour or more to calculate the optimum.

I implemented several caching schemes to speed up the interval ratio lookups and scoring. I also use the NumPy function unique, which examines all the chords and eliminates duplicates, returning just the unique ones along with the information needed to restore the removed ones. This eliminated 40% of the chords that had to be tuned.
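Both speedups can be sketched together. `np.unique(..., axis=0, return_inverse=True)` returns the distinct chord rows plus, for every original row, the index of its unique row, so indexing the tuned unique rows with that inverse restores the full chorale in order. `functools.lru_cache` handles the memoization. The function `tune_chord` is a hypothetical stand-in for the real annealing step, and the chord data is made up.

```python
from functools import lru_cache

import numpy as np


@lru_cache(maxsize=None)
def tune_chord(pitch_classes: tuple) -> tuple:
    """Hypothetical stand-in for the expensive annealing of one chord.

    It just returns 12-TET cents here. lru_cache needs hashable arguments,
    so chords are passed as tuples rather than arrays or lists.
    """
    return tuple(100 * pc for pc in pitch_classes)


def tune_chorale(chords: np.ndarray) -> np.ndarray:
    """Tune each distinct chord once, then expand back to the full chorale."""
    # unique rows, plus for every original row the index of its unique row
    unique_chords, inverse = np.unique(chords, axis=0, return_inverse=True)
    inverse = np.asarray(inverse).reshape(-1)  # guard against version differences in its shape
    tuned_unique = np.array([tune_chord(tuple(int(x) for x in row)) for row in unique_chords])
    return tuned_unique[inverse]  # restores original order and duplicates


chords = np.array([[7, 2, 11, 7], [0, 4, 7, 0], [7, 2, 11, 7], [2, 8, 5, 11], [0, 4, 7, 0]])
tune_chorale(chords)
print(tune_chord.cache_info())
```

`cache_info()` reports hits and misses, which is one way to measure cache hit rates like the ones quoted in the summary below.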

By default, a Python program runs on a single CPU core, regardless of how many cores my servers have. I solved that by asking a newer granite model, granite3.1-moe:3B, to suggest ways to parallelize the Python code. It came up with a brilliant solution that let me exploit multiple cores in my servers.

I quickly discovered that most of my servers, with Intel and AMD chips, advertised two threads per core. But even though each physical core presented two virtual cores, during CPU-intensive processing only one of them was effectively active at a time. My 12-core server that offered 24 threads took just as long when I parallelized it 12 ways as 24 ways.
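The post doesn't show the parallelization code, so here is one common standard-library approach, not necessarily what the model suggested: a `concurrent.futures.ProcessPoolExecutor` that hands each chord to a separate worker process. `tune_one` is a hypothetical placeholder for annealing a single chord.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def tune_one(chord):
    """Hypothetical stand-in for annealing a single chord (CPU-bound work)."""
    return [100 * pc for pc in chord]


def tune_parallel(chords, workers=None):
    """Tune chords in separate processes, one chord per task.

    Separate processes sidestep CPython's global interpreter lock, which
    keeps threads from running Python bytecode in parallel. os.cpu_count()
    counts logical CPUs, so on a chip with two hardware threads per core it
    typically reports twice the physical core count.
    """
    workers = workers or os.cpu_count() or 1
    if workers == 1:
        return [tune_one(c) for c in chords]  # serial path, handy for debugging
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(tune_one, chords, chunksize=8))
```

Called from a script guarded by `if __name__ == "__main__":` (required on platforms that start workers by spawning), `tune_parallel(chords, workers=12)` would run one process per physical core on the 12-core server described above; the timings reported here suggest that asking for 24 would not help.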

The latest Intel chips have abandoned multi-threading, because the majority of workloads get very little benefit from it. I’m in the process of getting one built now, with 24 real cores. I was able to test it and found that it performed well at 3.7 GHz, tuning 10 chorales in 15 minutes across all 20 cores. I had to switch off the turbo boost to 5.7 GHz because of heat problems. I’m working on solving that now.

The final result is a set of tunings for chorales that have very low number ratios. That means I have lots of chords with tunings that are not in normal 12-tone equal temperament. That includes some with higher ratios, like the third beat of measure 7 of BWV263, chord number 104, with a score of 107. This high score is caused by tuning the notes ['D♮', 'G♯', 'F♮', 'B♮']. Here are measures 6 and 7:

104: [2, 8, 5, 11] ['D♮', 'G♯', 'F♮', 'B♮'] [ 200, 782, 466, 1084] 107

104: [(0, 1, ' 582', ' 7/5 '), (0, 2, ' 266', ' 7/6 '), (0, 3, ' 884', ' 5/3 '), (1, 2, ' 316', ' 6/5 '), (1, 3, ' 302', '25/21'), (2, 3, ' 618', ' 10/7')]

It includes several relatively high number ratios: 7:5, 7:6, 25:21, and 10:7. But it’s just a passing tone that quickly resolves away from that diminished chord. Bach loves the tension that diminished chords produce. Think stacked minor thirds.

One of the problems with tuning chords individually is that the method picks the best cent value for each chord regardless of the surrounding chords. Notes can change cent values from one chord to the next even though they keep the same MIDI value. For example, in the two-measure excerpt above, measures six and seven of BWV263, the first D eighth note in the soprano part of measure 6 has been tuned to 202 cents, while the one that follows is at 200 cents. To resolve that change, I slide from 202 down to 200 over the duration of those 9 eighth notes. This is too small to notice. But the slides in measure three are more prominent: there I have to move a G♮ in the alto part from 700 cents all the way up to 749 cents. The measure starts with a G major chord, then quickly gets weird. Over the five separate chords from the beginning of the measure to the halfway point, the alto voice has to cover half a semitone. It’s subtle. I’ve slowed the performance down to make the movements less obvious.
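The slides themselves are rendered in Csound; this Python sketch only illustrates the arithmetic of a linear slide in cents, assuming one target value per eighth note (or per chord). The function name `glide` is hypothetical.

```python
def glide(start_cents: float, end_cents: float, steps: int) -> list[float]:
    """Evenly spaced cent values from start to end, inclusive.

    Linear in cents means every step is the same musical interval
    (the frequency changes exponentially)."""
    if steps < 2:
        return [float(end_cents)]
    step = (end_cents - start_cents) / (steps - 1)
    return [start_cents + i * step for i in range(steps)]


print(glide(202, 200, 9))  # the small soprano D correction
print(glide(700, 749, 5))  # the alto G rising about half a semitone
```

Spread over five chords, the alto's 49-cent rise works out to 12.25 cents per chord, while the soprano's 2-cent correction is a quarter of a cent per eighth note.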

Over the course of this chorale there are 183 separate slides, but with all the repetitions, they are all using these 9 glissandi:

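| glide # | decimal | cents | ratio |
|---|---|---|---|
| 1500 | 0.9988 | -2 | 1 |
| 1501 | 1.0012 | 2 | 1 |
| 1502 | 1.0116 | 20 | 51/50 |
| 1503 | 0.9977 | -4 | 1 |
| 1504 | 1.0287 | 49 | 36/35 |
| 1505 | 0.9857 | -25 | 49/50 |
| 1506 | 0.9885 | -20 | 49/50 |
| 1507 | 0.9834 | -29 | 49/50 |
| 1508 | 0.98 | -35 | 49/50 |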
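The decimal column in the glide table is the frequency multiplier for the cents column, 2^(cents/1200). A short sketch of the conversion in both directions (function names are illustrative):

```python
import math


def cents_to_multiplier(cents: float) -> float:
    """Frequency multiplier for a shift of `cents` (1200 cents = one octave)."""
    return 2 ** (cents / 1200)


def multiplier_to_cents(multiplier: float) -> float:
    """Inverse conversion: size of a frequency ratio in cents."""
    return 1200 * math.log2(multiplier)


for c in (-2, 2, 20, -4, 49, -25, -20, -29, -35):
    print(c, round(cents_to_multiplier(c), 4))
```

Rounded to four places, these reproduce the decimal column row by row, and `multiplier_to_cents(36 / 35)` comes out at about 48.8 cents, in line with glide 1504.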

Csound has no trouble with slides.

To summarize, the key technologies brought to bear on this musical challenge were:

Code up a way to determine the valid intervals that could be used for each interval in the chord

Find the optimum tonality diamond limit value to reduce the average score: 31 and 43

Code up a scoring algorithm to evaluate chords for their use of low number ratios

Cache the results of calls to determine the valid intervals and chord scores. I achieved 98% cache hits on the ratio selection, and 88% on the scoring. This reduced the workload to accomplish the tuning.

Find a way to slide from one cent value of a note to another for notes that had the same midi value but different cent values. This took some special Csound coding.

Build a simulated annealing algorithm that could find the optimal chord tuning in cents based on low number ratios

Find a way to parallelize the algorithm so each core in the CPU could work on a different chord.

Determine the optimum rearrangement scheme to send chords with different arrangements through the simulated annealing function.

Compress the chorale so each unique chord would only have to be tuned once, no matter how many times it appeared in the chorale.

Send the chords through a mix of sampled brass and woodwind instruments from the McGill University Master Samples using Csound.

Here is BWV263 from the Wedding Chorales by J.S. Bach:

BWV257:

And BWV258: