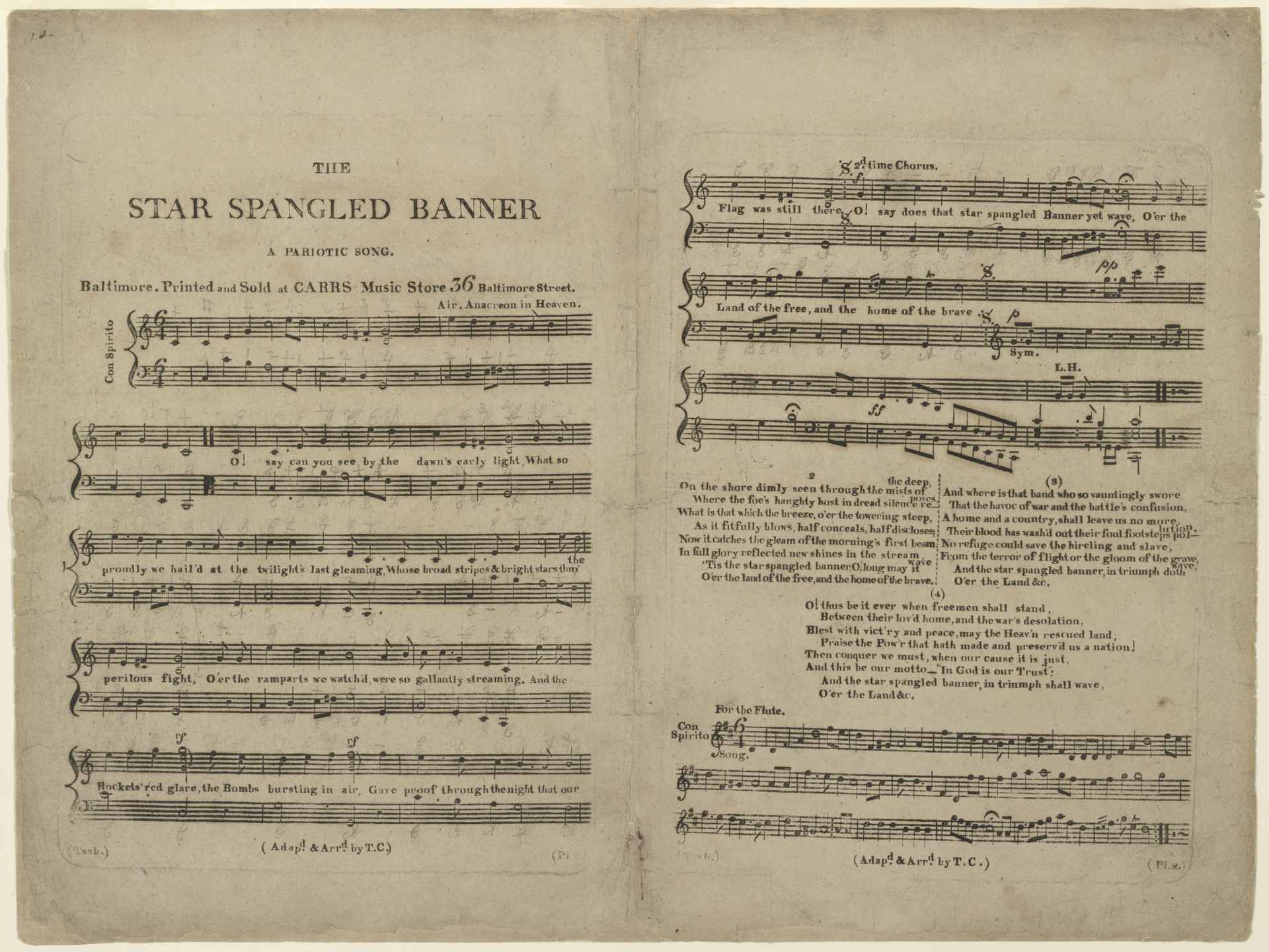

This post traces the path our national anthem took through a neural network and a set of probabilistic algorithms on its way to becoming a new piece of music. It started out as a MIDI file downloaded from the internet. Here are the first few measures as played on a quartet of bassoons with a staccato envelope, realized in Csound.

I then turn it from a nice 3:4 waltz into a 4:4 march by stretching out the first four 1/16th note time steps to 8 time steps.
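Here is a minimal sketch of that stretch in NumPy. It assumes the piece is already stored as an array of shape (voices, time steps) with one column per 1/16th note, and that the stretch is applied to the first four steps of every 12-step waltz measure, which is what makes each measure 16 steps long. The function name `waltz_to_march` and its parameters are made up for illustration.

```python
import numpy as np

def waltz_to_march(steps, steps_per_measure=12, stretch=4):
    """Stretch the first `stretch` steps of every measure to twice their length.

    steps: integer array of shape (voices, n_steps); n_steps must be a
    multiple of steps_per_measure, otherwise the reshape raises ValueError.
    """
    voices, _ = steps.shape
    measures = steps.reshape(voices, -1, steps_per_measure)

    # Repeat counts per step within a measure: 2 for the first four, 1 for the rest.
    repeats = np.ones(steps_per_measure, dtype=int)
    repeats[:stretch] = 2

    # Each 12-step measure becomes 16 steps, i.e. one measure of 4:4.
    stretched = np.repeat(measures, repeats, axis=2)
    return stretched.reshape(voices, -1)
```

Because a repeated MIDI number is read as a held note, duplicating a column simply makes whatever is sounding there last twice as long.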

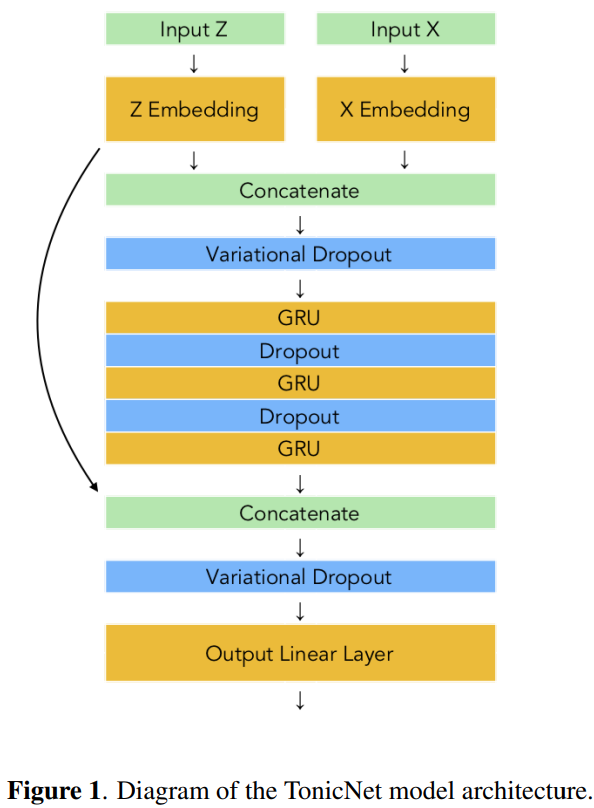

The MIDI files are transformed into a set of time steps, each 1/16th note in length. Each time step has four voices (soprano, alto, tenor, and bass), with a MIDI note number for every voice sounding at that time. A zero represents silence. The transformation into time steps has some imperfections. In the time step data structure, the assumption is that a non-zero value represents a MIDI note sounding at that time. If the MIDI number changes from one step to the next, that voice plays a new note. If it is the same as its predecessor, the voice holds the note for another 1/16th note. In the end we have an array of 32 time steps with 4 MIDI note numbers in each, where some notes are played, some are held, and others are silent.
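The played / held / silent rule can be sketched as a small decoder for a single voice. This is only an illustration of the rule as described above, not the actual conversion code; `voice_events` is a hypothetical helper.

```python
import numpy as np

def voice_events(voice):
    """Decode one voice into (start_step, midi_note, length_in_steps) tuples.

    A non-zero value that differs from the previous step starts a new note,
    a non-zero value equal to the previous step extends the current note,
    and a zero is silence.
    """
    events = []
    previous = 0
    for step, note in enumerate(voice):
        note = int(note)
        if note != 0 and note == previous:
            start, pitch, length = events[-1]
            events[-1] = (start, pitch, length + 1)   # held for another 1/16th
        elif note != 0:
            events.append((step, note, 1))            # newly played note
        previous = note
    return events

soprano = np.array([60, 60, 62, 0, 62, 62, 0, 0])
print(voice_events(soprano))
```

One consequence of this rule is that a pitch struck twice in a row with no gap is indistinguishable from a single held note.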

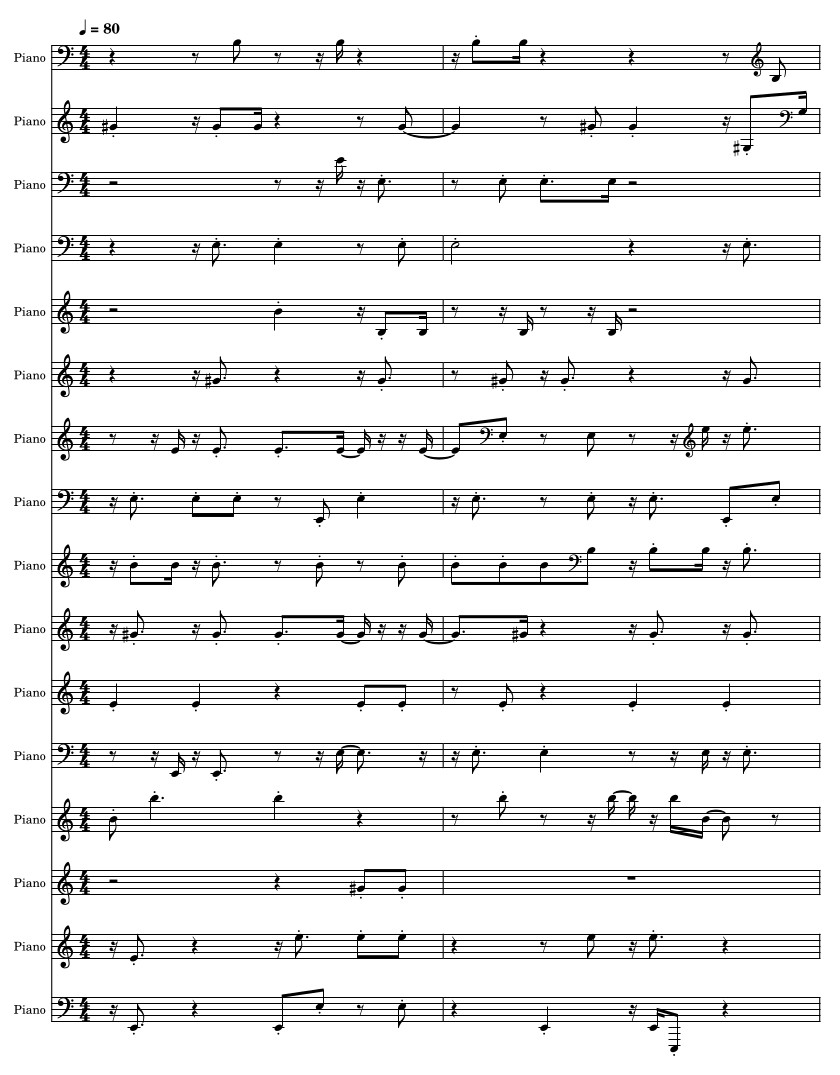

The next step is to take that march and chop it up into seventeen separate segments of 32 1/16th notes each. This is necessary because the Coconet deep neural network expects four-measure chorale segments in 4:4 time. The result is a NumPy array of dimension (17 segments, 4 voices, 32 time steps of 1/16th note each). I then feed each of the seventeen segments into Coconet, masking one voice to zeros and telling the network to recreate that voice as Bach would have done. I repeat that with another voice, and keep repeating until I have four separate chorales that are 100% synthesized. Here is one such 4-part chorale, the first of four.

And another, the last of the four, and so the most different from the original piece.
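For reference, the chopping and masking described above might look like the sketch below. It only covers the array handling, not the call into Coconet itself. The helper names are hypothetical, and padding the tail with zeros (silence) is my assumption for the case where the march does not divide evenly into 32-step segments.

```python
import numpy as np

SEGMENT_STEPS = 32  # four measures of 4:4 at sixteen 1/16th notes per measure

def chop_into_segments(march, segment_steps=SEGMENT_STEPS):
    """Turn a (voices, n_steps) array into (n_segments, voices, segment_steps)."""
    voices, n_steps = march.shape
    pad = (-n_steps) % segment_steps
    padded = np.pad(march, ((0, 0), (0, pad)))  # pad the end with zeros (silence)
    # (voices, n_segments, steps) -> (n_segments, voices, steps)
    return padded.reshape(voices, -1, segment_steps).transpose(1, 0, 2)

def mask_voice(segments, voice):
    """Return a copy with one voice zeroed out in every segment."""
    masked = segments.copy()
    masked[:, voice, :] = 0
    return masked
```

The masked copy is what would be handed to the network, which is then asked to fill the zeroed voice back in.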

I create about 100 of these arrays of 16-voice chorales (four 4-voice synthetic chorales each), and pick the ones that have the highest pitch entropy. That is, the ones with the most notes not in the original key of C major. It takes about 200 hours of compute time to accomplish this step, but I have a spare server in my office that I can task with this process. It took that server about a week to finish all 100.
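A rough sketch of that selection, assuming the score is simply the number of sounding time steps whose pitch class falls outside C major. Counting per time step rather than per note onset is my assumption, and the function names are invented for the example.

```python
import numpy as np

# Pitch classes of C major: C D E F G A B
C_MAJOR_PITCH_CLASSES = np.array([0, 2, 4, 5, 7, 9, 11])

def out_of_key_count(chorale):
    """Count sounding cells (non-zero MIDI numbers) that are not in C major."""
    sounding = chorale != 0
    in_key = np.isin(chorale % 12, C_MAJOR_PITCH_CLASSES)
    return int(np.count_nonzero(sounding & ~in_key))

def pick_most_chromatic(candidates, keep=1):
    """Return the `keep` candidates with the most out-of-key cells."""
    scores = [out_of_key_count(c) for c in candidates]
    order = np.argsort(scores)[::-1]  # highest score first
    return [candidates[i] for i in order[:keep]]
```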

Then I take one of those result sets, and put it through a set of algorithms that accomplish several transformations. The first is to multiply the time step voice arrays by a matrix of zeros and ones, randomly shuffled. I have control of the percentages of ones and zeros, so I can make some sections more dense than others.
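The masking idea can be sketched like this. It is a minimal version that assumes a single density value for the whole array; `random_mask` and its `density` parameter are names I made up for the example.

```python
import numpy as np

def random_mask(shape, density, rng):
    """Build a shuffled 0/1 array in which a `density` fraction of cells are 1."""
    n_cells = int(np.prod(shape))
    n_ones = int(round(density * n_cells))
    mask = np.zeros(n_cells, dtype=int)
    mask[:n_ones] = 1
    rng.shuffle(mask)  # shuffle the flat mask in place
    return mask.reshape(shape)

rng = np.random.default_rng(seed=0)
held = np.full((1, 4), 60)                  # one voice holding middle C for four steps
mask = random_mask(held.shape, density=0.5, rng=rng)
thinned = held * mask                       # zeros in the mask turn into rests
print(thinned)
```

Using a different density for different slices of the array is one way to make some sections denser than others.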

Imagine a note that is held for 4 1/16th notes. After this process, it might be changed into 2 eighth notes, or a dotted eighth and a sixteenth note. Or a 1/16th note rest, followed by a 1/16th note, followed by a 1/8th note rest. This creates arpeggiations. Here is a short example of that transformation.

That’s kind of sparse, so I can easily double the density with a single line of Python code:

```python
import numpy as np

# stack them on top of each other to double the density
one_chorale = np.concatenate((one_chorale, one_chorale), axis=0)
```

I could have doubled the length instead by using `axis=1`.

That’s getting more interesting. It still has a trace of the Star-Spangled Banner in it. The next step will fix that.

The second transformation is to extend sections of the piece that contain time steps with MIDI notes not in the root key of the piece, C major. I comb through the arrays checking the notes, and store the time steps that are not in C. Then I apply a number of different techniques to extend the amount of time spent on those time steps. For example, suppose the time steps from 16 to 24 all contain MIDI notes that are not in the key of C. I transform steps 16 through 24 by tiling each step a certain number of times to repeat it. Or I might make each time step 5 to 10 times longer. Or I might repeat some of them backwards. Or combine different transformations. There is a lot of indeterminacy in this, but the NumPy library provides ways to keep a certain amount of probabilistic control. I can ensure that each alternative is chosen a certain percentage of the time.
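As a rough illustration, the sketch below finds runs of out-of-key time steps and lengthens each run with one of three techniques chosen by weighted probability. The weights, the repeat ranges, and all function names are assumptions made up for the example. The real process has more variations than this.

```python
import numpy as np

C_MAJOR_PITCH_CLASSES = np.array([0, 2, 4, 5, 7, 9, 11])

def out_of_key_steps(chorale):
    """True for every time step where some sounding voice is outside C major."""
    sounding = chorale != 0
    in_key = np.isin(chorale % 12, C_MAJOR_PITCH_CLASSES)
    return np.any(sounding & ~in_key, axis=0)

def extend_section(section, rng):
    """Lengthen a (voices, steps) slice with one randomly chosen technique."""
    technique = rng.choice(["tile", "stretch", "reverse"], p=[0.5, 0.3, 0.2])
    if technique == "tile":
        # repeat the whole slice 2 to 4 times
        return np.tile(section, (1, int(rng.integers(2, 5))))
    if technique == "stretch":
        # make every time step 5 to 10 times longer
        return np.repeat(section, int(rng.integers(5, 11)), axis=1)
    # play the slice forwards, then backwards
    return np.concatenate((section, section[:, ::-1]), axis=1)

def extend_out_of_key(chorale, rng):
    """Extend every run of consecutive out-of-key steps; leave the rest alone."""
    flags = out_of_key_steps(chorale)
    edges = np.flatnonzero(np.diff(flags.astype(int))) + 1  # where runs begin
    pieces = []
    for run in np.split(np.arange(chorale.shape[1]), edges):
        section = chorale[:, run]
        pieces.append(extend_section(section, rng) if flags[run[0]] else section)
    return np.concatenate(pieces, axis=1)
```

The `p` argument of `rng.choice` is what gives the percentage control: with the weights above, tiling is picked about half the time.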

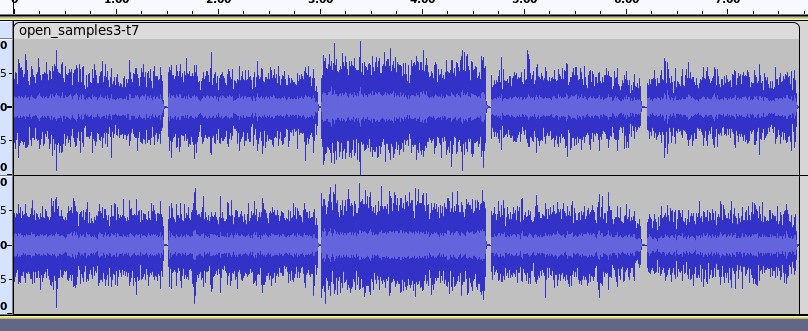

Here is a section that shows some of the repeated sections. I also select from different envelopes for the notes, with 95% of them a very short staccato and the other 5% an alternative envelope that holds for as long as the note doesn’t change. The held notes really stick out against the preponderance of staccato notes. I also add additional woodwind and brass instruments. Listen for the repetitions that don’t repeat very accurately.

There are a lot of other variations that are part of the process.

Here’s a complete rendition: