Today’s addition includes some arpeggios based on the output of the Coconet chorale-building model, as modified by me. I made them by building a set of masks that silence some of the notes in the arrays. My current data structure consists of 16 voice lines. Each line contains 264 slots, each a 1/16th note in length. If a slot contains a non-zero number, that note plays. If the same note was already sounding in the previous slot, it is held over rather than struck again. Masking a line turns some of its non-zero values into zeros, which silences those slots. If I silence voice lines in sequence, such as the soprano, then the alto, then the tenor, those voices drop out one after another. By carefully timing the masks, I create arpeggios.
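As a rough illustration of the slot layout described above, here is a minimal sketch of how one voice line could be read as notes, and what a mask does to it. The helper `slots_to_notes` and the sample values are hypothetical, and it assumes that a repeated non-zero value in consecutive slots means one held note.

```python
import numpy as np

def slots_to_notes(line):
    """Turn one voice line into (start_slot, length_in_slots, value) tuples.

    Assumption: a non-zero value repeated in consecutive slots is a single
    held note, and a zero is silence.
    """
    notes = []
    start = 0
    for i in range(1, len(line) + 1):
        # Close the current run when the value changes or the line ends.
        if i == len(line) or line[i] != line[start]:
            if line[start] != 0:
                notes.append((start, i - start, int(line[start])))
            start = i
    return notes

# Hypothetical voice line: one note held for eight 1/16th-note slots.
line = np.array([60, 60, 60, 60, 60, 60, 60, 60])
mask = np.array([0, 0, 0, 1, 1, 0, 1, 1])

print(slots_to_notes(line))         # [(0, 8, 60)]
print(slots_to_notes(line * mask))  # [(3, 2, 60), (6, 2, 60)]
```

The masked line enters three slots late and is broken into two short notes, which is the building block for staggering the voices into an arpeggio.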

I also spread the pianos out in the stereo field, so that you can hear each one more clearly.

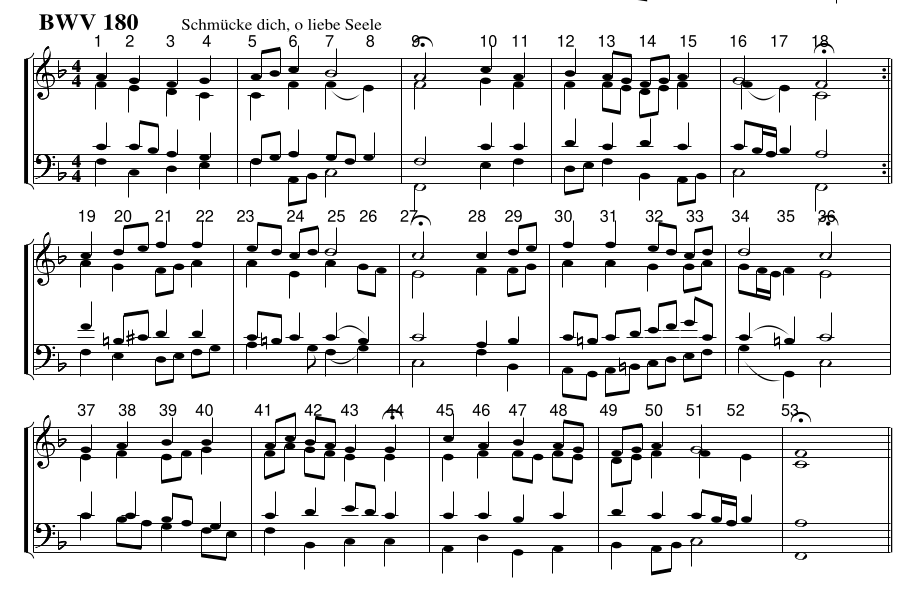

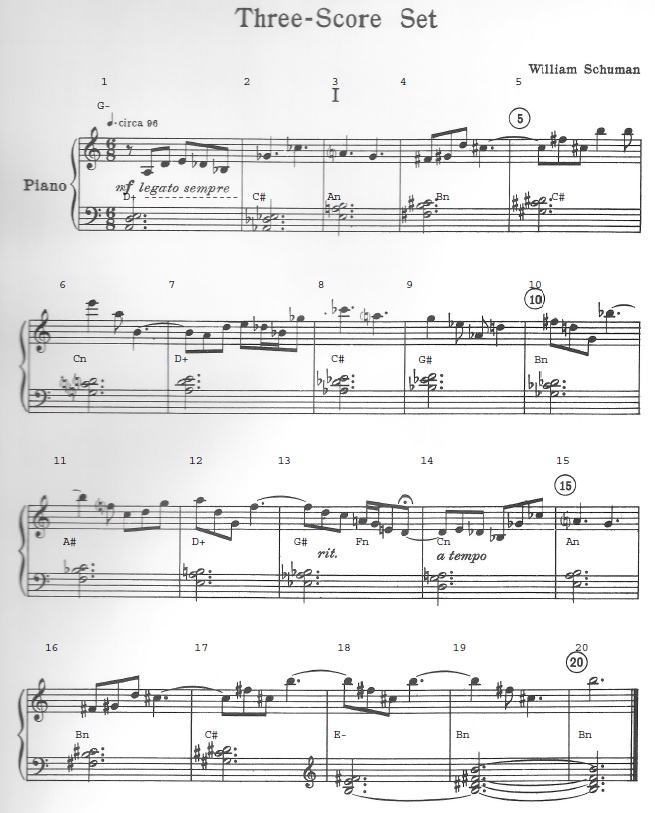

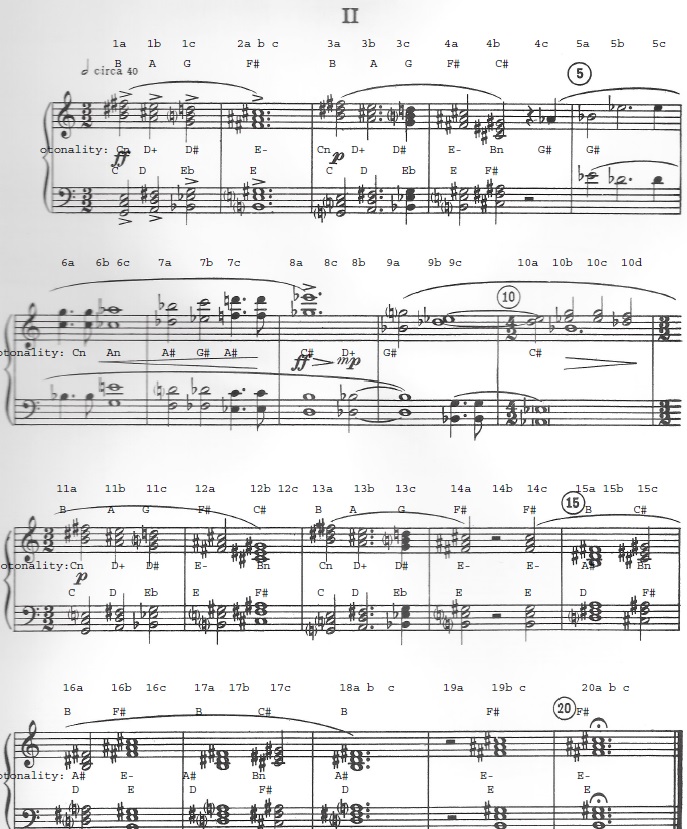

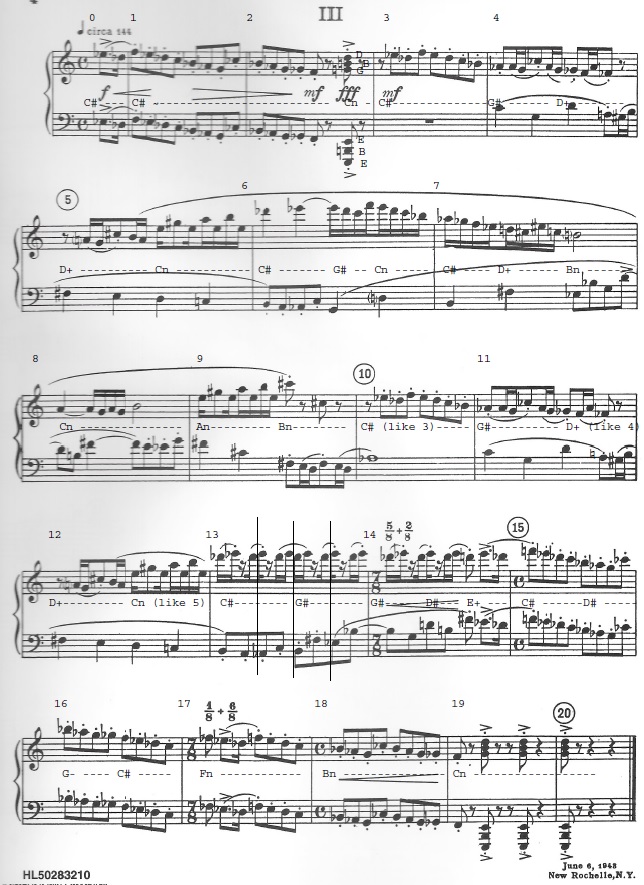

I created a bunch of chorales and ranked them by various metrics from a Python library called MusPy. The one called saved_chorale329 had the highest scale consistency score.
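For readers who want a feel for the metric, here is a plain NumPy sketch of a scale consistency score: the largest fraction of pitches that fit any single major or minor scale. It is not MusPy's own function. It assumes the non-zero array values are MIDI pitch numbers, and it counts slots rather than notes, so held notes weigh more than they would in MusPy. The chorale names and arrays are made up.

```python
import numpy as np

MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitone steps of a major scale
MINOR = [0, 2, 3, 5, 7, 8, 10]  # semitone steps of a natural minor scale

def scale_consistency(chorale):
    """Best share of sounding slots that fall inside one major or minor scale."""
    pitches = chorale[chorale > 0].astype(int)  # assumption: values are MIDI pitches
    if pitches.size == 0:
        return 0.0
    counts = np.bincount(pitches % 12, minlength=12)  # slots per pitch class
    best = 0
    for root in range(12):
        for scale in (MAJOR, MINOR):
            in_scale = sum(counts[(root + step) % 12] for step in scale)
            best = max(best, in_scale)
    return best / pitches.size

# Hypothetical candidates, keyed by name.
chorales = {
    "diatonic": np.array([[60, 62, 64, 65], [48, 52, 55, 0]]),
    "chromatic": np.array([[60, 61, 62, 63], [64, 65, 66, 67]]),
}
ranked = sorted(chorales, key=lambda name: scale_consistency(chorales[name]), reverse=True)
print(ranked)
```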

Here is the arpeggiation code in Python.

```python
import numpy as np

def arpeggiate(chorale, mask):
    """Silence notes in place by multiplying blocks of the chorale by a 0/1 mask."""
    width = mask.shape[1]  # number of slots covered by the mask (8 here)
    # The step of 3 applies the mask to every third block of slots;
    # the two blocks in between are left untouched.
    for i in range(0, chorale.shape[1] // width, 3):
        start = i * width
        end = (i + 1) * width
        chorale[:, start:end] = mask * chorale[:, start:end]
    return chorale

numpy_file = 'numpy_chorales/saved_chorale329.npy'
chorale = np.load(numpy_file)

# One row per voice line, one column per 1/16th-note slot.
# A 1 keeps the note in that slot, a 0 silences it.
mask = np.zeros((16, 8))

# 1st part
mask[0,] = [0,0,0,1,1,0,1,1]
mask[1,] = [0,0,1,1,0,1,1,1]
mask[2,] = [0,1,1,0,1,1,1,0]
mask[3,] = [1,1,1,1,1,1,0,1]

# 2nd part
mask[4,] = [0,1,1,1,0,1,1,1]
mask[5,] = [0,0,1,1,0,0,1,1]
mask[6,] = [0,0,0,1,0,0,0,1]
mask[7,] = [1,1,1,1,1,0,1,0]

# 3rd part
mask[8,] = [0,0,1,1,0,1,1,1]
mask[9,] = [0,1,1,1,0,0,0,1]
mask[10,] = [0,0,0,1,0,0,1,1]
mask[11,] = [1,1,1,0,1,0,1,0]

# 4th part
mask[12,] = [0,0,0,1,1,0,1,1]
mask[13,] = [0,0,1,1,0,1,1,1]
mask[14,] = [0,1,1,0,1,1,1,0]
mask[15,] = [1,1,1,0,1,1,0,1]

np.save('arpeggio7.npy', arpeggiate(chorale, mask))
```
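To see which slots the loop in `arpeggiate` actually touches, the arithmetic can be worked through for a 264-slot line and an 8-slot mask. This is only a small sketch, and the variable names are made up for illustration.

```python
n_slots, width, step = 264, 8, 3

n_blocks = n_slots // width             # 33 blocks of 8 slots
masked = list(range(0, n_blocks, step)) # blocks 0, 3, 6, ..., 30
spans = [(b * width, (b + 1) * width) for b in masked]

print(len(masked))  # 11
print(spans[:3])    # [(0, 8), (24, 32), (48, 56)]
print(spans[-1])    # (240, 248)
```

So the mask lands on 11 of the 33 blocks, and each masked half-measure of sixteenths is followed by 16 untouched slots.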